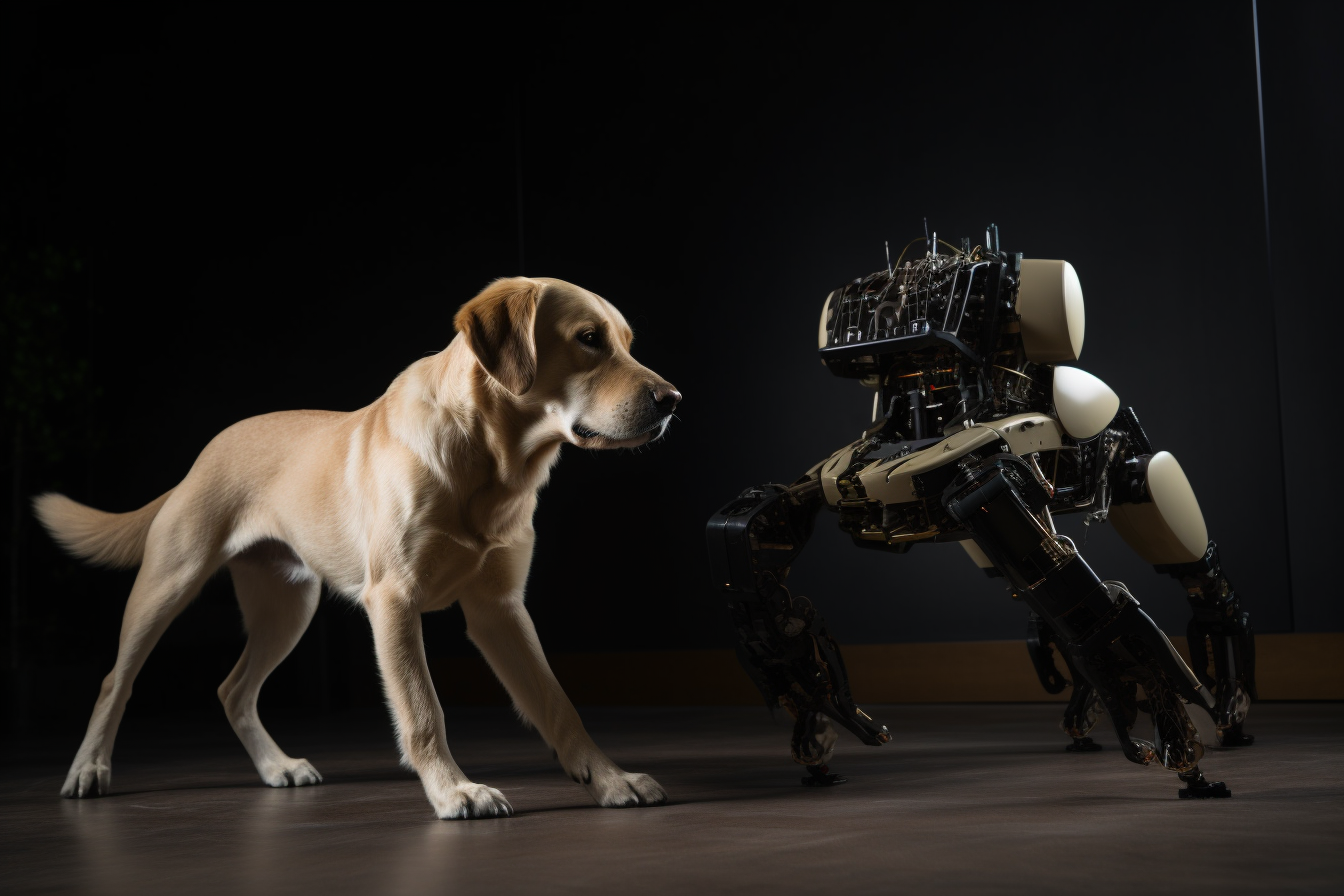

Imagine a future where robots are capable of mimicking the graceful movements of animals and humans with ease. Sounds like science fiction, doesn’t it? However, with the advent of the groundbreaking SLoMo framework, this future may be closer than we think.

What is SLoMo?

SLoMo, short for ‘Skilled Locomotion from Monocular Videos,’ is a revolutionary method that enables legged robots to imitate animal and human motions by transferring these skills from casual, real-world videos. This innovative approach surpasses traditional motion imitation techniques that often require expert animators, collaborative demonstrations, or expensive motion capture equipment.

The SLoMo Framework: A Three-Stage Approach

The SLoMo framework works in three stages:

Stage 1: Synthesis of Physically Plausible Key-Point Trajectory

In the first stage, a physically plausible reconstructed key-point trajectory is synthesized from monocular videos. This involves analyzing the video footage to identify key points that define the motion of the animal or human being imitated.

Stage 2: Offline Optimization of Reference Trajectory

The second stage involves optimizing a dynamically feasible reference trajectory for the robot offline. This includes body and foot motion, as well as contact sequences that closely track the key points identified in the first stage.

Stage 3: Online Tracking using Model-Predictive Controller

In the final stage, the reference trajectory is tracked online using a general-purpose model-predictive controller on robot hardware. This ensures that the robot’s movements are smooth and accurate, even when faced with unmodeled terrain height mismatch.

Successful Demonstrations and Comparisons

SLoMo has been demonstrated across a range of hardware experiments on a Unitree Go1 quadruped robot and simulation experiments on the Atlas humanoid robot. This approach has proven more general and robust than previous motion imitation methods, handling unmodeled terrain height mismatch on hardware and generating offline references directly from videos without annotation.

Limitations and Future Work

Despite its promise, SLoMo does have limitations, such as key model simplifications and assumptions, as well as manual scaling of reconstructed characters. To further refine and improve the framework, future research should focus on:

1. Extending the Work to Use Full-Body Dynamics

The first area for improvement is extending the work to use full-body dynamics in both offline and online optimization steps. This will enable more accurate and realistic motion imitation.

2. Automating the Scaling Process

Automating the scaling process and addressing morphological differences between video characters and corresponding robots is also essential for further development.

3. Investigating Improvements and Trade-Offs

Investigating improvements and trade-offs by using combinations of other methods in each stage of the framework, such as leveraging RGB-D video data, can help optimize the performance of SLoMo.

4. Deploying on Humanoid Hardware

Deploying the SLoMo pipeline on humanoid hardware, imitating more challenging behaviors, and executing behaviors on more challenging terrains will push the boundaries of what is possible with this innovative framework.

Conclusion

As SLoMo continues to evolve, the possibilities for robot locomotion and motion imitation are virtually limitless. This innovative framework may well be the key to unlocking a future where robots can seamlessly blend in with the natural world, walking, running, and even playing alongside their animal and human counterparts.

Authors

- John Z. Zhang

- Shuo Yang

- Gengshan Yang

- Arun L. Bishop

- Deva Ramanan

- Zachary Manchester

Affiliation

Robotics Institute, Carnegie Mellon University

References

https://arxiv.org/pdf/2304.14389.pdf